Facebook has published its internal enforcement guidelines.

These guidelines — or community standards, as they’re also known — are designed to help human moderators decide what content should (not) be allowed on Facebook. Now, the social network wants the public to know how such decisions are made.

“We decided to publish these internal guidelines for two reasons,” wrote Facebook’s VP of Global Policy Management Monika Bickert in a statement. “First, the guidelines will help people understand where we draw the line on nuanced issues.”

“Second,” the statement continues, “providing these details makes it easier for everyone, including experts in different fields, to give us feedback so that we can improve the guidelines – and the decisions we make – over time.”

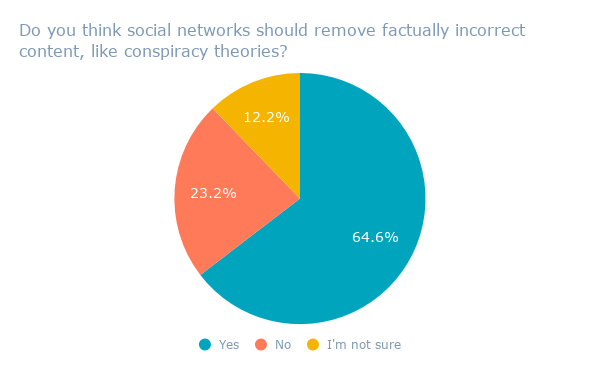

Facebook’s content moderation practices have been the topic of much discussion and, at times, contention. At CEO Mark Zuckerberg’s congressional hearings earlier this month, several lawmakers asked about the removal or suppression of certain content that they believed was based on political orientation.

And later this week, the House Judiciary Committee will host yet another hearing on the “filtering practices of social media platforms,” where witnesses from Facebook, Google, and Twitter have been invited to testify — though none have confirmed their attendance.

What the Standards Look Like

According to a tally from The Verge reporter Casey Newton, the newly released community standards total 27 pages and are divided into six main sections:

- Violence and Criminal Behavior

- Safety

- Objectionable Content

- Integrity and Authenticity

- Respecting Intellectual Property

- Content-Related Requests

Within these sections, the guidelines delve deeper into the moderation of content that might promote or indicate things like threats to public safety, bullying, self-harm, and “coordinating harm.”

That last item is particularly salient for many, following the publication of a New York Times report last weekend on grave violence in Sri Lanka that is said to have been ignited, at least in part, by misinformation spread on Facebook within the region.

According to that report, Facebook lacks the resources to combat this weaponization of its platform, due in part to its lack of moderators who speak Sinhalese (one of the most common languages in which this content appears).

As promised, I wanted to briefly explain why I hope anyone who uses Facebook — in any country — will make time for our Sunday A1 story on how the newsfeed algorithm has helped to provoke a spate of violence, as we’ve reconstructed in Sri Lanka: https://t.co/hrpBSMfb4t

— Max Fisher (@Max_Fisher) April 23, 2018

But beyond that, many sources cited in the story say that even when this violence-inciting content is reported or flagged by concerned parties on Facebook, they’re told that it doesn’t violate community standards.

Within the guidelines published today, an entire page is dedicated to “credible violence,” which includes “statements of intent to commit violence against any person, groups of people, or place (city or smaller).” That definition appears to cover much of the content that, according to the New York Times story, was used on Facebook to incite violence.

Bickert wrote in this morning’s statement that Facebook’s Community Operations team — which has 7,500 content reviewers (and which the company has said it hopes to more than double this year) — currently oversees these reports and does so in over 40 languages.

Whether that includes Sinhalese and other languages spoken in the regions affected by the violence described in the New York Times report was not specified.

A New Appeals Process

The public disclosure of these community standards will be followed by the rollout of a new appeals process, Bickert wrote, that will allow users and publishers to contest decisions made about the removal of content they post.

To start, the statement says, appeals will become available to those whose content was “removed for nudity/sexual activity, hate speech or graphic violence.” Users whose content is taken down will be notified and provided with the option to request further review into the decision.

That review will be done by a human moderator, Bickert wrote, usually within a day. If the decision is found to have been made in error, it will be reversed and the content will be republished.

In the weeks leading up to Zuckerberg’s congressional testimony earlier this month, Facebook released numerous statements about its policies and

It’s possible that this most recent statement was made, this appeals process introduced, and these standards published in anticipation of questions at this week’s hearings.

In addition to the aforementioned House Judiciary Committee hearing on “filtering practices” tomorrow, Facebook CTO Mike Schroepfer will testify before UK Parliament’s Digital, Culture, Media

Whether and how this statement and the content review guidelines are raised at these events remains to be seen. But it’s important to note again that one reason for their release, according to Bickert, was to allow feedback from the public, whether it’s composed of daily Facebook users or topical experts.

It’s possible that, amid the continued scrutiny of Facebook, all of its policies will continue to evolve.

Featured image credit: Facebook
