For the past several months, Facebook has been on a seemingly endless campaign to associate its name with greater transparency: of its terms, its privacy policies, and how it approaches and ranks the content shared on its site.

Today, Axios reports that the latest suite of changes is coming: this time, in the form of required labels for issue-based ads.

Much of that began last

That scrutiny was only heightened in

It was addressed in several ways, including CEO Mark Zuckerberg testifying before Congress for two days of back-to-back hearings. And in the days leading up to that testimony, Facebook unveiled a number of reactive changes, including updates to its ad verification and labeling policies.

A Look at Facebook’s Changing Ad Policies

Where the Recent Changes Began

In October, Facebook said that only verified advertisers — those who submitted a government-issued ID and provided a physical mailing address to the company — would be permitted to run electoral ads, like those for a specific candidate running for office.

In April, it said that those ads would also come with a “Political Ad” label, specifying such details as who paid for it.

That same month, Facebook announced that this verification process would also apply to ads about issues that are often the greatest sources of debate during elections. But the announcement didn't say that those ads would require labels (not yet, anyway).

New Labels for Issue-Based Ads

But as Axios reports, Facebook now plans to roll out such a requirement for ads about hotly contested issues, especially during election seasons.

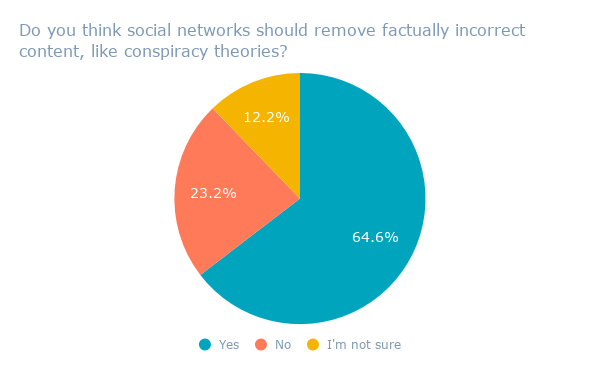

And while verifying the advertisers behind this promoted content was a strong first step, labeling it could prove even more important, since many credit issue-based content with playing the biggest role in the aforementioned efforts to influence U.S. elections.

When this verification was first announced last month, Facebook said it would partner with third parties to comprehensively determine what those issues are — a list that Axios says includes topics like gun control, immigration policies, and “values.”

Transparency Loves Company

The report comes on the heels of an announcement from Google last week that it, too, would roll out new policies for U.S. election ads. That effort will begin with a verification process similar to Facebook's and will be followed by a Transparency Report detailing who is funding election ads on Google and how much these individuals or groups are spending on them.

The transparency efforts will also include what Google calls "a searchable library for election ads," which looks similar to the Political Ad Archive Facebook announced in April. Facebook says that archive will store the full text and imagery of every ad carrying the "Political Ad" label, along with the number of impressions each ad received and demographic information about the audience it reached.

It’s important to note that Facebook was not the only online platform said to be weaponized for the spread of misinformation — Google, too, has faced its fair share of criticism for the same thing, which could be one motivation behind these latest ad transparency initiatives.

YouTube — which is owned by Google — has experienced its own challenges with the spread of divisive content, which CEO Susan Wojcicki addressed at SXSW in March.

And Twitter, which has come under fire for serving as a network rife with contention-spreading bots and bullying, has said that it’s undergoing efforts to address, measure, and improve the health of its platform.

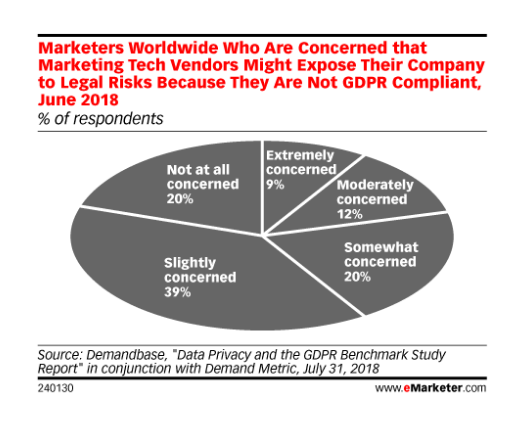

Transparency and ethics have been recurring themes throughout the tech industry lately. In light of the fallout Facebook has experienced from issues like misinformation and data privacy, the company, along with many of its peers in the sector, has gone out of its way to make bold statements on ethics.

At F8, Facebook's annual developer conference held last week, ethics took center stage in discussions of topics like how the growth of artificial intelligence is forcing developers to address bias in machine learning.

And at Microsoft Build yesterday, CEO Satya Nadella set the tone for the opening keynote by proclaiming early on, “Privacy is a human right.”

Many expect similar themes to echo throughout Google's own annual developer conference, I/O, which kicks off today with an opening keynote at 1:00 PM ET.

We’ll be covering the keynote later today, so stay tuned for those developments — and others that emerge along this undercurrent of tech responsibility.
